//+------------------------------------------------------------------+

//| MLP_xor.mq4 |

//| Copyright © 2008, MetaQuotes Software Corp. |

//| http://www.metaquotes.net |

//+------------------------------------------------------------------+

#property copyright "Copyright © 2008, MetaQuotes Software Corp."

#property link "http://www.metaquotes.net"

/* This program implements a simple Multi-Layer Perceptron (MLP) that solves the XOR problem (classification).

Adapted by Sebastien Marcel (2003-2004) from D. Collobert

The goal is to learn the XOR truth table:

 IN  | OUT
-----|-----
 0 0 |  0
 0 1 |  1
 1 0 |  1
 1 1 |  0

In the code below the inputs are encoded as -1/+1 and the targets as -0.6/+0.6
(target0/target1): equal inputs map to target1, differing inputs to target0.

The network is the following:

          w10--
               --
                 -->
 x1 -- w11 --> (hidden 1) -
    -      .>              -- w31
   w21--  ..                  --->
        -.                         output
   w12..  --                  --->
    .      -->             -- w32   /|\
 x2 .. w22 ..> (hidden 2) -          |
                 -->                 |
               --                    |
          w20--                     w30

x1, x2 are the inputs
wij are the weights (wi0 is the threshold of neuron i)

*/

//#define PI 3.14159265358979323846

//+------------------------------------------------------------------+

//| script program start function |

//+------------------------------------------------------------------+

int start()

{

//----

initrand();

main();

//----

return(0);

}

//+------------------------------------------------------------------+

#define RAND_MAX 32767.0

//use this first function to seed the random number generator,

//call this before any of the other functions

void initrand()

{

//srand((unsigned)(time(0)));

MathSrand(TimeLocal());

}

//generates a pseudo-random double between 0.0 and 0.999...

double randdouble0()

{

return (MathRand()/((RAND_MAX)+1));

}

//generates a pseudo-random double between min and max

double randdouble2(double min, double max)

{

if (min>max)

{

return (randdouble0()*(min-max)+max);

}

else

{

return (randdouble0()*(max-min)+min);

}

}

// Random generator

double Random(double inf, double sup)

{

return(randdouble2(inf,sup));

}

//tanh(x) = sinh(x)/cosh(x) = (e^x - e^-x)/(e^x + e^-x)

double MathTanh(double x)

{

double exp;

if(x>0) {exp=MathExp(-2*x);return ((1-exp)/(1+exp));}

else {exp=MathExp(2*x);return ((exp-1)/(1+exp));}

}

// Transfer function

double f(double x)

{

return (MathTanh(x / 2.0));

}

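// Derivative of the transfer function, written in terms of the neuron output y = f(a):
// f'(a) = 0.5 * (1 - y * y), so the argument x below is the output, not the input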

double f_prime(double x)

{

return ((1.0 - x * x) * 0.5);

}
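
// A minimal sanity-check sketch (hypothetical helper, not part of the original script).
// It compares the analytic derivative f_prime(f(x)) with a central finite difference
// of f at x and returns the absolute difference, which should be close to zero.
// The name f_prime_check and the step h = 0.0001 are assumptions made for illustration.
double f_prime_check(double x)
{
   double h = 0.0001;
   double numeric  = (f(x + h) - f(x - h)) / (2.0 * h); // finite-difference estimate
   double analytic = f_prime(f(x));                     // f_prime expects the output f(x)
   return (MathAbs(numeric - analytic));
}
// Possible usage, assuming it is called from main(): Print("check: ", f_prime_check(0.3));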

int main()

{

// inputs

double x1, x2;

// target

double y;

double target0;

double target1;

// integration (weighted input sum of each neuron, before the transfer function)

double a1, a2, a3;

// outputs

double y1, y2, y3;

// weights

double w11, w12, w21, w22, w10, w20, w31, w32, w30;

// gradients (error terms back-propagated to each neuron)

double Y1, Y2, Y3;

// training parameters

int T = 1000; // maximum number of iterations

double mse_min = 0.1; // training stops once the MSE falls below this value

double lambda = 0.1; // learning rate

//*****************************

// Initialize weights

//

double bound = 1.0 / MathSqrt(2);

w11 = Random(-bound, bound);

w12 = Random(-bound, bound);

w10 = Random(-bound, bound);

w21 = Random(-bound, bound);

w22 = Random(-bound, bound);

w20 = Random(-bound, bound);

w31 = Random(-bound, bound);

w32 = Random(-bound, bound);

w30 = Random(-bound, bound);

//*****************************

// targets for the tanh transfer function

//

target0 = -0.6;

target1 = 0.6;

//*****************************

// Print info on the MLP

//

Print("\n");

Print("Bound = ", bound,"\n");

Print("Initial weights:\n");

Print(" hidden neuron 1: ", w11," ", w12," ", w10,"\n");

Print(" hidden neuron 2: ", w21," ", w22," ", w20,"\n");

Print(" output neuron: ", w31," ", w32," ", w30,"\n");

//*****************************

// Print outputs of the MLP

//

Print("\n");

Print("MLP outputs:\n");

// Example 1: x = {1, 1} y = 1

x1 = 1.0;

x2 = 1.0;

y = target1;

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

Print(" MLP(",x1,",",x2,")=",y3," ",y,"\n");

// Example 2: x = {1, -1} y = target0

x1 = 1.0;

x2 = -1.0;

y = target0;

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

Print(" MLP(",x1,",",x2,")=",y3," ",y,"\n");

// Example 3: x = {-1, 1} y = target0

x1 = -1.0;

x2 = 1.0;

y = target0;

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

Print(" MLP(",x1,",",x2,")=",y3," ",y,"\n");

// Example 4: x = {-1, -1} y = target1

x1 = -1.0;

x2 = -1.0;

y = target1;

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

Print(" MLP(",x1,",",x2,")=",y3," ",y,"\n");

//*****************************

// Train the MLP

//

Print("\nStochastic gradient training:\n");

int t; // the current iteration

double mse; // the current MSE

//

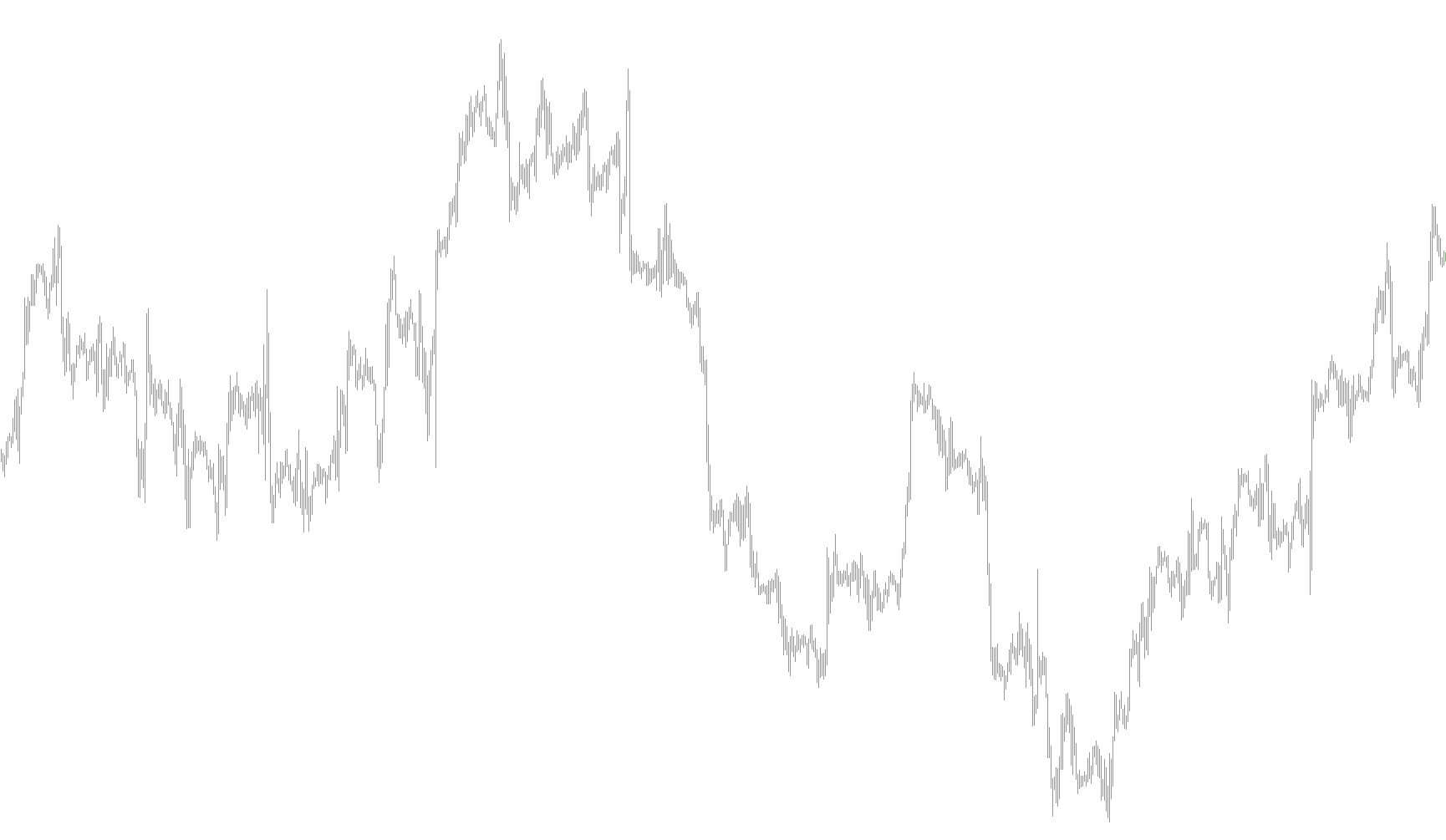

int pf = FileOpen("mse.txt", FILE_CSV|FILE_WRITE, ' '); // C original: fopen("mse.txt", "w")

if(pf < 0) { Print("Could not open mse.txt, error ", GetLastError()); return (-1); }

for(t = 1; t <= T ; t++)

{

mse = 0.0;

//*****************************

//

// Example 1: x = {1, 1} y = 1

x1 = 1.0;

x2 = 1.0;

y = target1;

// Forward

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

// Backward: Y3 is dE/da3 for E = 0.5 * (y3 - y)^2; Y1 and Y2 are Y3 propagated back through w31 and w32

Y3 = (y3 - y) * f_prime(y3);

Y1 = f_prime(y1) * Y3 * w31;

Y2 = f_prime(y2) * Y3 * w32;

// Update weights (the thresholds w10, w20, w30 enter the sums with a minus sign, hence the +)

w11 = w11 - lambda * x1 * Y1;

w12 = w12 - lambda * x2 * Y1;

w10 = w10 + lambda * Y1;

w21 = w21 - lambda * x1 * Y2;

w22 = w22 - lambda * x2 * Y2;

w20 = w20 + lambda * Y2;

w31 = w31 - lambda * y1 * Y3;

w32 = w32 - lambda * y2 * Y3;

w30 = w30 + lambda * Y3;

// Compute MSE (accumulated as half the squared error, summed over the four examples)

mse = mse + 0.5 * (y3 - y) * (y3 - y);

//*****************************

//

// Example 2: x = {1, -1} y = -1

x1 = 1.0;

x2 = -1.0;

y = target0;

// Forward

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

// Backward

Y3 = (y3 - y) * f_prime(y3);

Y1 = f_prime(y1) * Y3 * w31;

Y2 = f_prime(y2) * Y3 * w32;

// Update weights

w11 = w11 - lambda * x1 * Y1;

w12 = w12 - lambda * x2 * Y1;

w10 = w10 + lambda * Y1;

w21 = w21 - lambda * x1 * Y2;

w22 = w22 - lambda * x2 * Y2;

w20 = w20 + lambda * Y2;

w31 = w31 - lambda * y1 * Y3;

w32 = w32 - lambda * y2 * Y3;

w30 = w30 + lambda * Y3;

// Compute MSE

mse = mse + 0.5 * (y3 - y) * (y3 - y);

//*****************************

//

// Example 3: x = {-1, 1} y = -1

x1 = -1.0;

x2 = 1.0;

y = target0;

// Forward

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

// Backward

Y3 = (y3 - y) * f_prime(y3);

Y1 = f_prime(y1) * Y3 * w31;

Y2 = f_prime(y2) * Y3 * w32;

// Update weights

w11 = w11 - lambda * x1 * Y1;

w12 = w12 - lambda * x2 * Y1;

w10 = w10 + lambda * Y1;

w21 = w21 - lambda * x1 * Y2;

w22 = w22 - lambda * x2 * Y2;

w20 = w20 + lambda * Y2;

w31 = w31 - lambda * y1 * Y3;

w32 = w32 - lambda * y2 * Y3;

w30 = w30 + lambda * Y3;

// Compute MSE

mse = mse + 0.5 * (y3 - y) * (y3 - y);

//*****************************

//

// Example 4: x = {-1, -1} y = 1

x1 = -1.0;

x2 = -1.0;

y = target1;

// Forward

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

// Backward

Y3 = (y3 - y) * f_prime(y3);

Y1 = f_prime(y1) * Y3 * w31;

Y2 = f_prime(y2) * Y3 * w32;

// Update weights

w11 = w11 - lambda * x1 * Y1;

w12 = w12 - lambda * x2 * Y1;

w10 = w10 + lambda * Y1;

w21 = w21 - lambda * x1 * Y2;

w22 = w22 - lambda * x2 * Y2;

w20 = w20 + lambda * Y2;

w31 = w31 - lambda * y1 * Y3;

w32 = w32 - lambda * y2 * Y3;

w30 = w30 + lambda * Y3;

// Compute MSE

mse = mse + 0.5 * (y3 - y) * (y3 - y);

//*****************************

//

// print the MSE in a file

FileWrite(pf,mse);//fprintf(pf, "%f\n", mse);

Print(".");

//fflush(stdout);

if(mse < mse_min) break;

}

Print("\n");

//

FileClose(pf);

//*****************************

// Print info about training

//

if(t > T) t = T; // the loop leaves t at T + 1 when it ends without an early break

Print("Number of iterations = ", t,"\n");

Print("Final MSE = ", mse,"\n");

//*****************************

// Print info about the MLP

//

Print("Final weights:\n");

Print(" hidden neuron 1:", w11," ", w12," ", w10,"\n");

Print(" hidden neuron 2:", w21," ", w22," ", w20,"\n");

Print(" output neuron: ", w31," ", w32," ", w30,"\n");

//*****************************

// Print outputs of the MLP

Print("\n");

Print("MLP outputs:\n");

// Example 1: x = {1, 1} y = 1

x1 = 1.0;

x2 = 1.0;

y = target1;

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

//printf(" MLP(%f, %f)=%f \t y=%f\n", x1, x2, y3, y);

Print(" MLP(",x1,",",x2,")=",y3," ",y,"\n");

// Example 2: x = {1, -1} y = target0

x1 = 1.0;

x2 = -1.0;

y = target0;

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

//printf(" MLP(%f, %f)=%f \t y=%f\n", x1, x2, y3, y);

Print(" MLP(",x1,",",x2,")=",y3," ",y,"\n");

// Example 3: x = {-1, 1} y = target0

x1 = -1.0;

x2 = 1.0;

y = target0;

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

//printf(" MLP(%f, %f)=%f \t y=%f\n", x1, x2, y3, y);

Print(" MLP(",x1,",",x2,")=",y3," ",y,"\n");

// Example 4: x = {-1, -1} y = target1

x1 = -1.0;

x2 = -1.0;

y = target1;

a1 = w11 * x1 + w12 * x2 - w10;

y1 = f(a1);

a2 = w21 * x1 + w22 * x2 - w20;

y2 = f(a2);

a3 = w31 * y1 + w32 * y2 - w30;

y3 = f(a3);

//printf(" MLP(%f, %f)=%f \t y=%f\n", x1, x2, y3, y);

Print(" MLP(",x1,",",x2,")=",y3," ",y,"\n");

Print("End of program reached !!\n");

return (0);

}
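
// A possible refactoring sketch (hypothetical helper, not part of the original script).
// The forward pass that main() repeats for every example could be collected in one
// function. This assumes the nine weights are packed into an array in the order
// w11, w12, w10, w21, w22, w20, w31, w32, w30; main() itself keeps them as scalars,
// so the array layout and the name ForwardPass are assumptions made for illustration.
double ForwardPass(double x1, double x2, double& w[])
{
   double y1 = f(w[0] * x1 + w[1] * x2 - w[2]); // hidden neuron 1
   double y2 = f(w[3] * x1 + w[4] * x2 - w[5]); // hidden neuron 2
   return (f(w[6] * y1 + w[7] * y2 - w[8]));    // output neuron
}
// Possible usage, given a filled array double w[9]: double y3 = ForwardPass(1.0, -1.0, w);
// Training would still need y1 and y2 for the backward pass, so this sketch only
// covers the four print-only evaluations before and after the training loop.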
